This is a quick reference on passing variables between tasks in Azure Pipelines, a popular CI/CD platform. Azure Pipelines recently added support for multi-stage pipelines defined in YAML documents, which lets you create both build and release (CI and CD) pipelines in a single azure-pipelines.yaml file. This is very powerful: developers can define pipelines that continuously build and deploy their apps using a declarative syntax, and store the YAML document, versioned, in the same repo as their code.

One recurring question is: how do you pass variables between tasks? While passing variables from one step to another within the same job is relatively easy, sharing state and variables with tasks in other jobs, or even in other stages, is less straightforward.

The examples below are about using multi-stage pipelines within YAML documents. I’ll focus on pipelines running on Linux, and all examples show bash scripts. The same concepts would apply to developers working with PowerShell or Batch scripts, although the syntax of the commands will be slightly different. The work below is based on the official documentation, adding some examples and explaining how to pass variables between stages.

Passing variables between tasks in the same job

This is the easiest one. In a script task, you need to print a special value to STDOUT that will be captured by Azure Pipelines to set the variable.

For example, to pass the variable FOO between scripts:

- Set the value with the command `echo "##vso[task.setvariable variable=FOO]some value"`
- In subsequent tasks, you can use the `$(FOO)` syntax to have Azure Pipelines replace the variable with `some value`
- Alternatively, in the following script tasks, `FOO` is also set as an environment variable and can be accessed as `$FOO`

Full pipeline example:

    steps:

      # Sets FOO to be "some value" in the script and the next ones
      - bash: |
          FOO="some value"
          echo "##vso[task.setvariable variable=FOO]$FOO"

      # Using the $() syntax, the value is replaced inside Azure Pipelines before being submitted to the script task
      - bash: echo "$(FOO)"

      # The same variable is also present as an environment variable in scripts; here the variable expansion happens within bash
      - bash: echo "$FOO"
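
When several scripts need to set variables, the logging command can be wrapped in a small shell function. The sketch below is only an illustration: `set_pipeline_var` is a hypothetical name, not something Azure Pipelines provides, and all it does is print the same `##vso[task.setvariable ...]` line used in the example above.

```shell
# Hypothetical helper (not part of Azure Pipelines): prints the logging
# command that the agent reads from STDOUT to set a variable.
# Usage: set_pipeline_var NAME VALUE
set_pipeline_var() {
  printf '##vso[task.setvariable variable=%s]%s\n' "$1" "$2"
}

FOO="some value"
set_pipeline_var FOO "$FOO"
```

Run outside of a pipeline, the function simply prints the command text, which makes it easy to check what a script would send to the agent.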

You can also use the $(FOO) syntax inside task definitions. For example, these steps copy files to a folder whose name is defined as variable:

    pool:
      vmImage: 'Ubuntu-16.04'

    steps:
      - bash: echo "##vso[task.setvariable variable=TARGET_FOLDER]$(Pipeline.Workspace)/target"
      - task: CopyFiles@2
        inputs:
          sourceFolder: $(Build.SourcesDirectory)
          # Note the use of the variable TARGET_FOLDER
          targetFolder: $(TARGET_FOLDER)/myfolder

Wondering why the `vso` label? That’s a legacy identifier from when Azure Pipelines used to be part of Visual Studio Online, before being rebranded Visual Studio Team Services, and finally Azure DevOps!

Passing variables between jobs

Passing variables between jobs in the same stage is a bit more complex, as it requires working with output variables.

Similarly to the example above, to pass the FOO variable:

- Make sure you give a name to the job, for example `job: firstjob`
- Likewise, make sure you give a name to the step as well, for example `name: mystep`
- Set the variable with the same command as before, but adding `;isOutput=true`, like: `echo "##vso[task.setvariable variable=FOO;isOutput=true]some value"`
- In the second job, define a variable at the job level, giving it the value `$[ dependencies.firstjob.outputs['mystep.FOO'] ]` (remember to use single quotes for expressions)

A full example:

    jobs:

      - job: firstjob
        pool:
          vmImage: 'Ubuntu-16.04'
        steps:

          # Sets FOO to "some value", then marks it as an output variable
          - bash: |
              FOO="some value"
              echo "##vso[task.setvariable variable=FOO;isOutput=true]$FOO"
            name: mystep

          # Show output variable in the same job
          - bash: echo "$(mystep.FOO)"

      - job: secondjob
        # Need to explicitly mark the dependency
        dependsOn: firstjob
        variables:
          # Define the variable FOO from the previous job
          # Note the use of single quotes!
          FOO: $[ dependencies.firstjob.outputs['mystep.FOO'] ]
        pool:
          vmImage: 'Ubuntu-16.04'
        steps:

          # The variable is now available for expansion within the job
          - bash: echo "$(FOO)"

          # To send the variable to the script as an environment variable, it needs to be set in the env dictionary
          - bash: echo "$FOO"
            env:
              FOO: $(FOO)
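
The job name, step name, and variable name have to line up between the logging command in the first job and the expression in the second. As a rough illustration of how the pieces fit together, the sketch below prints both strings from the same three names. `set_output_var` and `output_var_ref` are hypothetical helpers invented for this example; they only format text and do not talk to Azure Pipelines.

```shell
# Hypothetical helpers, for illustration only.

# Prints the logging command that sets a variable and marks it as output.
# Arguments: variable name, value.
set_output_var() {
  printf '##vso[task.setvariable variable=%s;isOutput=true]%s\n' "$1" "$2"
}

# Prints the expression a dependent job would use to read that variable.
# Arguments: job name, step name, variable name.
output_var_ref() {
  printf "\$[ dependencies.%s.outputs['%s.%s'] ]\n" "$1" "$2" "$3"
}

set_output_var FOO "some value"
output_var_ref firstjob mystep FOO
```

The second line printed is the value that goes into the `variables` section of the dependent job, as in the full example above.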

Passing variables between stages

At this time, it’s not possible to pass variables between different stages. There is, however, a workaround that involves writing the variable to disk and then passing it as a file, leveraging pipeline artifacts.

To pass the variable FOO from a job to another one in a different stage:

- Create a folder that will contain all variables you want to pass; any folder could work, but something like `mkdir -p $(Pipeline.Workspace)/variables` might be a good idea.
- Write the contents of the variable to a file, for example `echo "$FOO" > $(Pipeline.Workspace)/variables/FOO`. Even though the name could be anything you’d like, giving the file the same name as the variable might be a good idea.
- Publish the `$(Pipeline.Workspace)/variables` folder as a pipeline artifact named `variables`
- In the second stage, download the `variables` pipeline artifact
- Read each file into a variable, for example `FOO=$(cat $(Pipeline.Workspace)/variables/FOO)`
- Expose the variable in the current job, just like we did in the first example: `echo "##vso[task.setvariable variable=FOO]$FOO"`
- You can then access the variable by expanding it within Azure Pipelines (`$(FOO)`) or use it as an environment variable inside a bash script (`$FOO`).

Example:

    stages:

      - stage: firststage
        jobs:

          - job: firstjob
            pool:
              vmImage: 'Ubuntu-16.04'
            steps:

              # To pass the variable FOO, write it to a file
              # While the file name doesn't matter, naming it like the variable and putting it inside the $(Pipeline.Workspace)/variables folder could be a good pattern
              - bash: |
                  FOO="some value"
                  mkdir -p $(Pipeline.Workspace)/variables
                  echo "$FOO" > $(Pipeline.Workspace)/variables/FOO

              # Publish the folder as pipeline artifact
              - publish: $(Pipeline.Workspace)/variables
                artifact: variables

      - stage: secondstage
        jobs:

          - job: secondjob
            pool:
              vmImage: 'Ubuntu-16.04'
            steps:

              # Download the artifacts
              - download: current
                artifact: variables

              # Read the variable from the file, then expose it in the job
              - bash: |
                  FOO=$(cat $(Pipeline.Workspace)/variables/FOO)
                  echo "##vso[task.setvariable variable=FOO]$FOO"

              # Just like in the first example, we can expand the variable within Azure Pipelines itself
              - bash: echo "$(FOO)"

              # Or we can expand it within bash, reading it as an environment variable
              - bash: echo "$FOO"

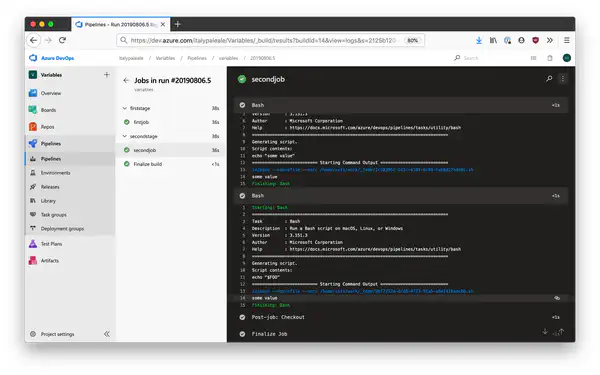

Here’s the pipeline running. Note in the second stage how line #14 shows `some value` in both bash scripts. However, take a look at the script being executed on line #11: in the first case, the variable was expanded inside Azure Pipelines (so the script became `echo "some value"`), while in the second one bash is reading an environment variable (the script remains `echo "$FOO"`).
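
The difference between the two kinds of expansion can be imitated locally. The sketch below is not how Azure Pipelines is implemented; it is a plain text substitution that mimics the behaviour described above, and `expand_macro` is a hypothetical helper written for this example. It assumes the value contains no `|`, `&`, or backslash characters, which `sed` would treat specially.

```shell
# Rough local imitation; expand_macro is a hypothetical helper.
# Replaces every literal $(NAME) in a script's text with a value,
# similar in spirit to what happens before the script reaches bash.
# Arguments: script text, variable name, value.
expand_macro() {
  printf '%s\n' "$1" | sed "s|[$]($2)|$3|g"
}

# Case 1: the script text itself is rewritten before any shell sees it
expand_macro 'echo "$(FOO)"' FOO "some value"

# Case 2: the script text is unchanged; the shell reads FOO from its environment
FOO="some value" sh -c 'echo "$FOO"'
```

The first call prints the rewritten script text, while the second runs the untouched script and lets the shell resolve `$FOO` itself.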

If you want to pass more than one variable, you can create multiple files within the $(Pipeline.Workspace)/variables folder (e.g. for a variable named MYVAR, write it to $(Pipeline.Workspace)/variables/MYVAR), then read all the variables in the second stage.
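
One possible way to handle several variables is to loop over the files in the folder instead of reading them one by one. The following is a minimal local sketch under a few assumptions: a temporary directory stands in for $(Pipeline.Workspace)/variables, each file holds a single-line value, and `export_var` and `import_vars` are hypothetical helper names.

```shell
# Local sketch; export_var and import_vars are hypothetical helpers.

# First stage: write one file per variable, named after the variable.
# Arguments: folder, variable name, value.
export_var() {
  mkdir -p "$1"
  printf '%s' "$3" > "$1/$2"
}

# Second stage: read every file back and print one logging command each.
# Arguments: folder.
import_vars() {
  for f in "$1"/*; do
    [ -f "$f" ] || continue
    printf '##vso[task.setvariable variable=%s]%s\n' "$(basename "$f")" "$(cat "$f")"
  done
}

# Stand-in for $(Pipeline.Workspace)/variables
VARS_DIR="$(mktemp -d)"
export_var "$VARS_DIR" FOO "some value"
export_var "$VARS_DIR" MYVAR "another value"
import_vars "$VARS_DIR"
```

In a real pipeline the two functions would live in different stages, with the publish and download steps from the example above moving the folder between them.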